Why Ensuring Transparency and Explainability in Generative AI Matters for Enterprises

Why Transparent Generative AI Matters

Generative AI, especially Large Language Models (LLMs), is rapidly reshaping the business landscape, promising everything from enhanced customer experiences to operational efficiency gains. Yet enterprise leaders often find themselves at a crossroads—on the one hand, there is the allure of quick wins and competitive advantages; on the other, the pitfalls of opaque AI systems that can produce biased or untraceable outputs. Organizations want advanced AI capabilities, but the primary challenge is building trust in complex machine-learning processes that can feel like “black boxes.”

As a trusted advisor in Data & AI, I have seen how this tension leaves leaders asking: “How can I ensure my AI system’s decisions are fair, reliable, and justifiable to stakeholders?” and “How can I feel confident that integrating an LLM will not expose us to ethical or regulatory concerns?” This drive toward clarity is not just about altruism; it is tied to real ROI. Indeed, privacy and ethical concerns are holding adoption back: only 23% of companies plan to deploy commercial models [1], which shows that transparency is not a luxury but a necessity.

In this post, we’ll explore the core challenges of transparency, review industry-leading strategies, dive into real-world examples, and map out practical steps for adopting explainable, trustworthy Generative AI across your organization.

1. The Obscurity Dilemma: Key Challenges with Transparency and Explainability

1.1 Opacity and Stakeholder Mistrust

Complex AI models, particularly LLMs with billions of parameters, frequently operate as “black boxes.” While they can generate coherent text, identify patterns, and make predictions, stakeholders often lack clarity regarding why or how these outputs materialize. This opacity can fuel skepticism and limit buy-in from decision-makers who need assurance that AI-generated outcomes can be justified. According to recent data, 67% of customers expect AI models to be free from prejudice, and 71% expect these systems to clearly explain their results [2]. Without robust transparency measures, organizations risk eroding trust among customers, employees, and regulators.

1.2 Privacy and Ethical Constraints

Generative AI depends on vast amounts of data, which naturally triggers privacy concerns. When LLMs ingest sensitive information, enterprises need to explain, and prove, that personal data is handled responsibly. As noted earlier, privacy and ethical concerns help explain why only 23% of companies plan to deploy commercial models [1], underlining how a lack of AI transparency can derail adoption. An additional complexity emerges in highly regulated fields (e.g., healthcare and finance), where AI outputs must comply with stringent rules. Failure to provide clear explanations can result in regulatory penalties and significant reputational damage.

1.3 Model Bias and Fairness

When LLMs learn from biased data, they are likely to reproduce those biases in their outputs. This risk can translate into discriminatory practices, whether in hiring, lending, or content recommendation. Addressing bias requires more than simply removing sensitive data fields, because other features can act as proxies for them; it demands robust transparency and explainable workflows. Adoption figures reflect this caution: 67% of organizations are using Generative AI products that rely on LLMs, yet 58% are still in the experimental phase [1]. Many remain hesitant to scale, in part because they fear the unknown biases lurking under the hood.
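To see why dropping sensitive fields is not enough, it helps to measure outcomes per group after the fact. The following is a minimal sketch in Python, assuming a hypothetical audit log of `(group, approved)` pairs in which the group label is held back for auditing only. The 0.8 cutoff echoes the "four-fifths" rule of thumb used in US employment contexts and serves here purely as an illustrative flag, not as a legal test.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, approved) pairs; both names are
    hypothetical and stand in for your own audit data.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Toy audit log: the model never saw `group`, yet outcomes still differ.
audit_log = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # illustrative threshold only
print(rates, round(ratio, 2), flagged)
```

In this toy log, group B is approved half as often as group A, so the check raises a flag even though the sensitive attribute was never a model input.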

1.4 Regulatory Pressures

Regulators worldwide are pushing for heightened AI oversight. The EU’s AI Act, for example, imposes stringent transparency and explainability obligations, particularly for high-risk AI systems. Non-compliance can lead to significant fines that in some cases exceed those under the GDPR: up to EUR 35 million or 7% of worldwide annual turnover for the most serious AI Act violations, against a GDPR ceiling of EUR 20 million or 4%. Enterprises are thus under growing pressure to institute robust transparency frameworks or face the consequences. Beyond statutory obligations, the question of brand integrity looms large. Organizational leaders cannot afford to appear cavalier about AI’s ethical footprint or its impact on end-users.

2. From Dark to Light: Strategic Insights for Transparent and Explainable AI

2.1 Embrace a Holistic Governance Model

Effective transparency begins with governance. Many businesses are investing in cross-functional AI governance committees to address everything from data quality to legal compliance. A balanced approach ensures that each department—IT, data science, legal, and operations—has a role in shaping policies around data usage, permissible model behaviors, and ongoing auditing. IBM’s AI governance frameworks, such as watsonx.governance, highlight the need for controlling and managing Generative AI models across platforms to ensure compliance with data protection regulations [3]. This approach positions transparency as a shared organizational responsibility rather than a narrow technical add-on.

2.2 Implement Explainability Techniques

Explainable AI (XAI) is increasingly seen as a requirement for enterprises deploying Generative AI. LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) are examples of widely used tools that break down how specific input factors lead to certain outputs. These methods help companies pinpoint potentially discriminatory patterns. They also enable data scientists to tweak models for better alignment with ethical guidelines. Indeed, 67% of enterprises now employ at least one explainability technique in their AI systems, illustrating the growing acceptance of explainability tools [4].
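To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a tiny, made-up scoring function in plain Python. It does not use the `shap` library; the model, the feature names, and the baseline "average applicant" are all hypothetical, and the brute-force enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    Features missing from a coalition take their baseline value.
    Cost grows as 2**n, so this is for small illustrations only.
    """
    features = list(instance)
    n = len(features)

    def value(coalition):
        mixed = {f: (instance[f] if f in coalition else baseline[f])
                 for f in features}
        return predict(mixed)

    attributions = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        attributions[f] = total
    return attributions


# Hypothetical scoring model with one interaction term.
def toy_score(x):
    return 2.0 * x["income"] - 1.0 * x["debt"] + 0.5 * x["income"] * x["tenure"]


applicant = {"income": 3.0, "debt": 2.0, "tenure": 4.0}
average = {"income": 1.0, "debt": 1.0, "tenure": 2.0}

phi = shapley_values(toy_score, applicant, average)
gap = toy_score(applicant) - toy_score(average)
print(phi)
print(sum(phi.values()), gap)  # attributions add up to the gap
```

For this toy model the attributions come out to roughly 7 for income, -1 for debt, and 2 for tenure, which sum to the 8-point gap between the applicant and the baseline. That additive breakdown is what lets a reviewer see which inputs pushed a score up or down.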

2.3 Watermarking and Content Provenance

Ensuring authenticity in AI-generated content goes hand in hand with transparency. Google, for instance, has integrated a watermarking technology called SynthID that embeds a subtle digital signature into AI-generated content, recognizable even after alterations [5]. This measure combats misinformation and unauthorized use of AI outputs. Additionally, implementing or supporting cross-industry provenance standards—like the Coalition for Content Provenance and Authenticity (C2PA)—helps enterprises maintain the traceability of content from creation to consumption, bridging the transparency gap for end-users who want to verify the source.
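The traceability idea behind provenance standards can be illustrated with a much simpler stand-in: hash the content, record how it was generated, and sign that record. The sketch below uses Python's standard `hashlib` and `hmac` modules. It is not the C2PA manifest format and says nothing about how SynthID embeds watermarks; the key, field names, and model name are hypothetical.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-rotate-me"  # hypothetical; use a managed key in practice


def build_manifest(content: bytes, generator: str, created: str) -> dict:
    """Attach a content hash and an HMAC signature to basic provenance facts."""
    claim = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "created": created,
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """True only if the signature is valid and the content is unchanged."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False
    return hashlib.sha256(content).hexdigest() == manifest["claim"]["sha256"]


original = b"Quarterly summary drafted by an AI assistant."
manifest = build_manifest(original, "example-llm-v1", "2024-11-01T09:00:00Z")

print(verify_manifest(original, manifest))                 # True
print(verify_manifest(original + b" (edited)", manifest))  # False
```

Note the difference in intent: a signed record like this detects any alteration, whereas a watermark is designed to remain recognizable after alterations. A shared secret also keeps the sketch short; real provenance systems generally rely on public-key signatures so that third parties can verify a claim without holding the signing key.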

2.4 Comply with Regulatory Frameworks

A proactive approach to regulation can serve as a competitive advantage. As laws such as the EU’s AI Act ramp up compliance demands, enterprises must plan for internal controls that anticipate these regulations, from model risk assessments to robust documentation protocols. Meeting these requirements not only shields organizations from costly fines but also bolsters brand reputation. A survey from Deloitte revealed that 89% of C-level leaders believe ethical governance structures support innovation [6]. Forward-thinking organizations see compliance as an enabler of trust-driven market differentiation rather than an impediment to progress.

3. Approaches by Major Companies

3.1 IBM: Beyond Black Boxes

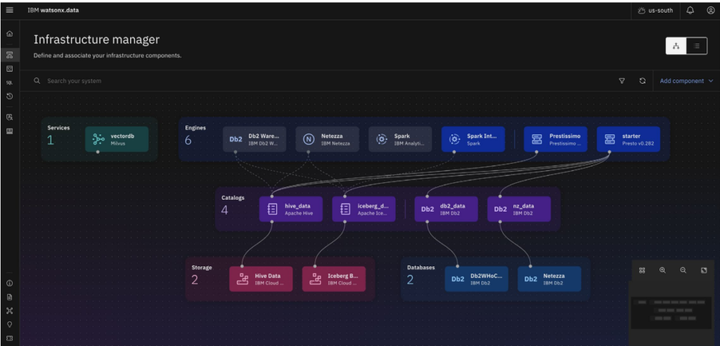

IBM has taken a structured route to tackle explainability. The IBM watsonx platform integrates AI algorithms that prioritize trustworthiness and compliance [7], and it includes AI Explainability 360, an open-source toolkit providing a suite of algorithms that clarify how AI arrives at decisions. On top of that, IBM’s “gen AI data ingestion factory” ensures enterprise data is prepped with governance and compliance in mind before feeding it into Generative AI solutions [8]. By emphasizing fairness, clarity, and robust security measures, IBM underscores the principle that trust is at the core of AI adoption.

Notably, IBM’s Granite large language model has emerged as one of the most transparent LLMs in the industry. According to the second Foundation Model Transparency Index (FMTI) released by Stanford University’s Center for Research on Foundation Models, IBM Granite scored a perfect 100% in multiple categories designed to measure how open models truly are [21]. IBM further demonstrated its commitment to openness by recently open-sourcing its Granite code models, enabling a broader community to inspect and understand the algorithms, datasets, and processes driving these systems. This achievement reinforces IBM’s philosophy that AI innovation should go hand in hand with transparency, helping ensure that advanced models benefit people and enterprises in an equitable, explainable manner.

3.2 Google: Evaluating and Watermarking Models

Google’s Vertex Gen AI Evaluation Service systematically evaluates AI-generated responses for quality, consistency, and fairness, further refining or filtering outputs [9]. Additionally, the introduction of invisible watermarks in AI-generated content addresses authenticity, especially for critical use cases like journalistic or financial data. Google’s Responsible Generative AI Toolkit also provides features for prompt refining, debugging, and watermarking, promoting more consistent and transparent model behavior [5]. This dual emphasis on evaluation and provenance elevates user trust by demonstrating that Google invests in a lifecycle approach to AI transparency.

3.3 Amazon: Governance and Safe AI Deployment

Amazon fosters an ecosystem for Generative AI through AWS, focusing heavily on governance. Amazon’s Titan family of foundation models is engineered to detect and remove harmful content, thereby mitigating reputational and legal risks for organizations deploying such models [10]. Alongside hardware optimizations—like AWS Trainium and Inferentia chips—Amazon aims to bolster both performance and safety in AI services. Moreover, Amazon’s “AI governance framework” provides guidance on data usage, model oversight, and ongoing auditing, supporting compliance throughout the model lifecycle. Partnerships—like Amazon’s collaboration with Anthropic—demonstrate a commitment to forging alliances that reinforce safe AI deployment [11].

Adding to this collaborative momentum, Amazon and IBM recently announced a partnership to scale responsible Generative AI for enterprise customers [22]. By combining IBM’s open-source technology and Granite models, which are built for business and scored a perfect 100% in multiple transparency categories, with AWS’s robust infrastructure services, the two tech giants aim to offer a transparent, secure, and trust-oriented AI ecosystem. As part of this effort, IBM Granite models are now available on both Amazon Bedrock and Amazon SageMaker JumpStart, with additional integrations to streamline governance, security, and observability across the entire AI lifecycle. IBM watsonx.governance is also set to provide a direct, customizable user experience within Amazon SageMaker, enabling risk assessments and model approvals to be handled in one unified workflow. This partnership illustrates how Amazon’s commitment to safe AI extends beyond its own solutions, supporting a broader, cross-platform vision of responsible innovation.

3.4 Microsoft: Operationalizing Transparency

Microsoft emphasizes “human-centered transparency” by providing stakeholders with clear insights into AI operations. Microsoft’s Responsible AI Standard calls for thorough documentation and accountability checks, aligning well with modern regulatory standards [12]. The company’s Generative AI Operations (GenAIOps) framework offers a structured way to manage the operational complexities of building, testing, and deploying LLMs at scale. This approach was successfully utilized by fashion retailer ASOS, illustrating how structured operational guidelines can streamline AI workflows [13]. By embedding pre-trained models like Meta’s Llama 2 into Azure AI, Microsoft underscores that robust model documentation and user education are critical elements in an end-to-end AI strategy.

3.5 OpenAI: Structured Transparency

OpenAI focuses on robust transparency frameworks, evidenced by a 9-dimensional, 3-level analytical model for clarifying potential limitations and downstream usage of LLMs [14]. The GPT Model Specification outlines objectives, rules, and defaults, functioning as a blueprint for ethical AI behavior [15]. By actively researching explainable generative AI (GenXAI), OpenAI underscores that advanced techniques—such as multistep reasoning and external knowledge integration—are integral for safe, reliable user interactions [16]. This layered approach mirrors industry best practices, cementing the notion that transparency is not a one-size-fits-all solution but an evolving set of guidelines and standards.

4. Real-World Case Studies and Examples

4.1 BNP Paribas and Mistral AI

BNP Paribas, a leading global bank, has partnered with Mistral AI to leverage LLMs for customer support, sales, and IT [17]. Operating in one of the most heavily regulated industries, BNP Paribas required an AI solution that did not compromise on transparency. By implementing Mistral AI’s specialized LLMs, the bank improved customer responsiveness and automated key steps in IT processes. Rigorous transparency metrics were used at each stage, ensuring that outputs complied with both internal guidelines and external regulations. This use case illustrates how even risk-averse institutions can embrace Generative AI by prioritizing clarity and ethical considerations.

4.2 Tommy Hilfiger’s AI-Powered Design Assistants

Tommy Hilfiger, an iconic fashion brand, uses IBM’s AI platform to build design assistants that streamline the creative process. By analyzing extensive customer data and predicting upcoming style trends, these assistants provide real-time suggestions to designers. Crucially, Tommy Hilfiger employs frameworks that highlight the data sources and logic behind each design recommendation [18]. This ensures that creative teams do not view AI suggestions as a magic box. The brand also capitalizes on the technology to forecast what consumers want, driving innovation while maintaining transparency about how decisions are made, thereby strengthening stakeholder trust in AI-driven design.

4.3 Adobe Firefly: Sharing Training Data Sources

Adobe’s Firefly toolset exemplifies a commitment to transparency, openly sharing training data sources so users can understand the provenance of the generated images [19]. This stands in contrast to other models that disclose little about the datasets fueling their capabilities. By clearly detailing which images the algorithm references during training, Adobe avoids potential legal and ethical snags. For design-intensive industries, this type of traceability is a game-changer, as it alleviates concerns about inadvertently plagiarizing or infringing on copyrighted materials.

4.4 GitLab Duo for Developer Productivity

GitLab Duo integrates Generative AI to enhance developer productivity across the software development lifecycle [20]. This integration was paired with robust documentation outlining how the AI suggestions are generated and how sensitive code is handled. As developers rely on the system for automated tests and code completion, GitLab offers logs and audits detailing how certain features were suggested or flagged. By baking transparency into the developer workflow, GitLab ensures a healthy balance between efficiency and accountability.

5. Actionable Recommendations for Enterprise Leaders

5.1 Start with a Clear Governance Framework

Begin by establishing a cross-functional AI governance board encompassing data scientists, compliance officers, business unit leaders, and legal experts. Task this board with developing robust transparency policies covering data handling, usage guidelines, and model lifecycle management. Outline the steps necessary for the continuous monitoring of AI systems, ensuring that transparency is not just a milestone but a sustained practice.

5.2 Adopt Standardized Toolkits and Protocols

Select recognized explainability and traceability tools—such as LIME, SHAP, or IBM’s AI Explainability 360—tailored to your organization’s technology stack. These solutions offer tangible methods for identifying bias and clarifying how models arrive at predictions. Additionally, consider adopting watermarking and provenance standards like C2PA or Google’s SynthID for authenticating AI-generated content. This is particularly relevant for organizations that produce marketing or digital media assets, as watermarked content helps mitigate the spread of misinformation.

5.3 Invest in Workforce Training

A workforce that understands the fundamentals of Generative AI and transparency best practices is less likely to misuse the technology. Invest in training sessions that cover not only technical skills but also the ethical and legal facets of AI. Remember that 89% of C-level executives surveyed feel that ethical governance structures support innovation [6]. Empowering your teams with the right knowledge fosters a culture of responsibility and encourages employees to raise red flags when they spot potential transparency gaps.

5.4 Plan for Regulatory Compliance Early

Craft roadmaps that align with emerging regulations, such as the EU AI Act. Even if your organization currently operates primarily in regions with less stringent rules, adopting best practices prepares you for future expansion or evolving legislative environments. This involves setting up robust documentation processes that capture training data sources, model versions, performance metrics, and any modifications over time. Doing so positions you to swiftly generate proof of compliance when regulators or clients request it.
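One lightweight way to start is a structured record kept alongside each model. The sketch below is a minimal illustration in Python covering the four items named above; the class, its field names, and every value are hypothetical and do not follow any regulator's template.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class ModelRecord:
    """Minimal documentation record for one deployed model version."""
    name: str
    version: str
    training_data_sources: list
    metrics: dict
    change_log: list = field(default_factory=list)

    def log_change(self, date: str, description: str) -> None:
        """Append a dated note describing a modification to the model."""
        self.change_log.append({"date": date, "description": description})

    def to_json(self) -> str:
        """Serialize the record so it can be archived or shared on request."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


record = ModelRecord(
    name="support-assistant",
    version="1.2.0",
    training_data_sources=["internal-faq-2024", "public-product-docs"],
    metrics={"answer_accuracy": 0.91, "toxicity_rate": 0.002},
)
record.log_change("2024-10-15", "Fine-tuned on updated refund policy documents")
print(record.to_json())
```

Storing such records in version control next to the model artifacts gives auditors a dated trail without requiring a dedicated tool on day one.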

5.5 Integrate Transparency into Product Design

Rather than layering explainability features after the fact, embed transparency considerations into every stage of product or service development. During the earliest phases—model selection, data cleansing, or feature engineering—ask how you will explain each component to stakeholders. Some companies, such as Tommy Hilfiger, have integrated these considerations from design ideation onward [18]. Consider micro-explanations in user interfaces that clarify how AI arrived at a recommendation, which can greatly enhance user confidence, especially in high-stakes applications like healthcare or finance.

5.6 Secure the Endpoints

No transparency strategy is complete without robust security measures. Generative AI systems must be protected from data breaches and malicious manipulation of training data—an attack vector that could degrade or corrupt model outputs. Scrutinize your supply chain of data and the integrity of open-source libraries used. By ensuring robust security, you preserve the credibility of any transparent or explainable AI outcomes you present to users.

5.7 Conduct Periodic Audits and Stress Tests

Transparency is not a static achievement; it is an ongoing process. Schedule periodic audits that review your AI models’ outputs for bias, drift, or compliance misalignments. Stress-test the system with edge cases to understand how it handles unusual or sensitive scenarios. Engage internal stakeholders and, if feasible, external experts to validate whether your transparency measures remain effective. These audits should produce clear, actionable reports that can be shared across departments for continuous improvement.
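A drift check is one of the easier audit steps to automate. The sketch below computes the Population Stability Index (PSI) between a baseline distribution of model outputs and a later one. The category counts are invented, and the 0.1 and 0.25 cutoffs are common rules of thumb rather than formal standards, so treat them as assumptions to tune.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / e_total, eps)  # eps avoids log(0) for empty bins
        a_share = max(a / a_total, eps)
        score += (a_share - e_share) * math.log(a_share / e_share)
    return score


def drift_status(score, warn=0.1, alert=0.25):
    """Map a PSI score to a coarse label using rule-of-thumb cutoffs."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "stable"


# Hypothetical counts of model outputs per response category.
baseline = [500, 300, 150, 50]    # at launch
this_month = [490, 310, 155, 45]  # close to launch behavior
after_update = [300, 300, 250, 150]

print(drift_status(psi(baseline, this_month)))
print(drift_status(psi(baseline, after_update)))
```

A "warn" or "alert" result does not say what went wrong; it only tells the audit team where to look first, which keeps the periodic review focused.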

Ensuring Clarity in AI Adoption

In essence, Generative AI’s promise of innovation is inseparable from the urgent need for transparency and explainability. As leaders look to harness LLMs for competitive advantages—whether in product design, workflow automation, or data-driven decision-making—the requirement to demystify these sophisticated tools becomes paramount. By understanding the root challenges—ranging from privacy and ethical concerns to regulatory pressures—and adopting strategic governance frameworks, explainability toolkits, and robust training programs, organizations can confidently deploy advanced AI while preserving trust.

Transparency closes the gap between potential AI-driven breakthroughs and stakeholder acceptance. It reassures end-users that the system is fair and validates that the enterprise can meet regulatory and ethical requirements. Ultimately, embracing transparency and explainability transforms Generative AI from a curiosity or a high-risk venture into a reliable ally for sustainable success. The outcome? AI that feels less like an enigma and more like a trusted, fully integrated partner in driving your business forward.

References

[1] https://springsapps.com/knowledge/large-language-model-statistics-and-numbers-2024

[2] https://enterprisersproject.com/article/2020/10/artificial-intelligence-ai-ethics-14-statistics

[3] https://www.ibm.com/thought-leadership/institute-business-value/en-us/report/ai-governance

[4] https://www.forrester.com/report/the-state-of-explainable-ai-2024/RES180504

[5] https://deepmind.google/technologies/synthid/

[6] https://www.aiwire.net/2024/08/07/deloitte-study-reveals-c-level-executives-commitment-to-ethical-ai-frameworks/

[7] https://medium.com/@sabine_vdl/ibm-ai-for-business-10-steps-where-watsonx-creates-competitive-advantage-through-trustworthy-ai-cfd0696967b6

[8] https://www.ibm.com/think/topics/generative-ai-for-data-management

[9] https://cloud.google.com/blog/products/ai-machine-learning/enhancing-llm-quality-and-interpretability-with-the-vertex-gen-ai-evaluation-service

[10] https://www.aboutamazon.com/news/company-news/amazon-responsible-ai

[11] https://www.anthropic.com/news/anthropic-amazon

[12] https://techcommunity.microsoft.com/blog/machinelearningblog/deploy-large-language-models-responsibly-with-azure-ai/3876792

[13] https://techcommunity.microsoft.com/discussions/azure-ai-services/exploring-the-future-of-generative-ai-within-microsoft-technologies/4287275

[14] https://ojs.aaai.org/index.php/AIES/article/view/31757

[15] https://medium.com/@steven.mei0814/decoding-openais-gpt-model-specification-a-roadmap-for-responsible-ai-development-36e0db846b55

[16] https://dl.acm.org/doi/fullHtml/10.1145/3613905.3638184

[17] https://www.qorusglobal.com/content/28766-bnp-paribas-and-mistral-ai-forge-multi-year-partnership-to-enhance-banking-services

[18] https://www.calibraint.com/blog/applications-of-generative-ai-industries

[19] https://www.forbes.com/sites/bernardmarr/2024/05/17/examples-that-illustrate-why-transparency-is-crucial-in-ai/

[20] https://builtin.com/articles/innovating-sync-story-gitlab-duo

[21] https://research.ibm.com/blog/ibm-granite-transparency-fmti

[22] https://newsroom.ibm.com/blog-ibm-and-aws-accelerate-partnership-to-scale-responsible-generative-ai