My Key Takeaways from the Gartner Data & Analytics Summit 2025: AI Governance – Designing an Effective AI Governance Operating Model

Artificial intelligence continues to redefine the way businesses operate, opening doors to innovative products and services while raising complex new questions around accountability, ethics, compliance, and trust. At the recent Gartner Data & Analytics Summit 2025, I attended a particularly illuminating session titled “AI Governance: Design an Effective AI Governance Operating Model.” The session made clear why AI governance matters and how it is evolving from a checkbox exercise into a central pillar for organizations seeking to scale their AI initiatives responsibly.

Below are my key takeaways and reflections from that session, structured to give you a clear sense of why AI governance matters, how to implement it, and what it might look like in action.

1. An Anecdote That Says It All: Apple Card and Steve Wozniak

The speaker opened the session with a story about the Apple Card—a credit card launched by Apple in partnership with Goldman Sachs—and how it sparked controversy when Steve Wozniak, Apple’s co-founder, discovered that his wife was offered a lower credit limit than he was, despite their shared assets and marital status. In the ensuing uproar, the explanation from support was effectively: “It’s not us, it’s the algorithm.”

While Goldman Sachs was eventually able to produce documentation showing no explicit intent to discriminate, Apple was left to deal with the public relations fallout from the lack of transparency around the card’s algorithmic decisions. There were congressional inquiries and lawsuits alleging discrimination, demonstrating how an AI-driven decision (in this case, credit limits) can quickly spiral into a broader crisis when fairness and transparency are not addressed proactively through governance.

This story set the tone for the entire session: AI governance isn’t just a technical imperative; it’s a strategic, ethical, and reputational one. Organizations are increasingly accountable for how AI systems make decisions, which means a clearly defined set of principles, policies, and oversight structures is critical for reducing the risk of unintended harm, not to mention legal repercussions and public backlash.

2. Defining AI Governance

The session provided a succinct definition of AI governance that resonated with many in the audience:

“AI governance is the process of creating policies, assigning decision rights, and ensuring organizational accountability for balancing both the risks and the investments associated with AI.”

Importantly, governance must not focus solely on risk. The speaker emphasized that value creation is equally important. In other words, AI governance is about making sure you realize the full benefits of AI—driving efficiency, innovation, competitive differentiation—while also ensuring that risks are well understood, mitigated, and continuously monitored.

To break it down:

- Policy Creation: Establishing guidelines and frameworks for how AI should be developed, deployed, and used.

- Decision Rights: Clarifying who gets to make what calls when it comes to AI deployment, risk escalation, and strategic direction.

- Accountability: Ensuring there is a system in place to hold teams and individuals responsible for any AI-driven outcomes that affect customers, employees, or society at large.

3. Focus on the Portfolio of Use Cases, Not Theory

One of the biggest challenges with “AI governance” as an abstract concept is that it can quickly become overwhelming. The speaker suggested a pragmatic approach: start with specific use cases in your current AI portfolio.

By anchoring your governance framework to concrete use cases, you avoid:

- Over-investing in processes that might never see real-world application.

- Delaying the launch or scaling of beneficial AI solutions because you’re stuck in theoretical compliance exercises.

- Failing to discover the real gaps and issues that only surface when technology meets data, people, and real operational processes.

Why 3–6 Use Cases Work Best

The recommendation was to select three to six AI use cases that share data dependencies and/or stakeholder groups. That way, you can focus governance efforts on a manageable slice of your broader AI ambitions. Whether these use cases succeed or fail as they move from pilot into production, they will show you how to refine policies, oversight mechanisms, and organizational roles.

If you pick only a single use case, you run the risk of iterating endlessly without a clear sense of whether the problem might lie in the AI model itself, data quality, user adoption, or something else entirely. If you pick too many, you can spread your governance oversight too thin and end up with incomplete or inconsistent practices.
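To make the selection idea concrete, here is a minimal sketch of how you might shortlist a pilot group by shared data dependencies. This is my own illustration, not something shown in the session: the portfolio, the use case names, and the scoring rule (count the pairs of use cases that share at least one data source) are all assumptions.

```python
from itertools import combinations

# Hypothetical portfolio: use case name -> data sources it depends on.
PORTFOLIO = {
    "churn_prediction": {"crm", "billing"},
    "credit_limit_scoring": {"crm", "credit_bureau"},
    "support_chatbot": {"crm", "tickets"},
    "invoice_ocr": {"billing", "documents"},
    "warehouse_forecast": {"inventory"},
}


def shared_pairs(group, portfolio):
    """Count pairs of use cases in the group that share at least one data source."""
    return sum(1 for a, b in combinations(group, 2) if portfolio[a] & portfolio[b])


def pick_pilot_group(portfolio, size=3):
    """Return the group of `size` use cases with the most pairwise data overlap."""
    if not 3 <= size <= 6:
        raise ValueError("the session's guidance was three to six use cases")
    # Sorting the names makes the result deterministic when scores tie.
    candidates = combinations(sorted(portfolio), size)
    return max(candidates, key=lambda group: shared_pairs(group, portfolio))


if __name__ == "__main__":
    print(pick_pilot_group(PORTFOLIO, size=3))
```

In practice you would weigh stakeholder overlap and business value as well; the point is only that "share data dependencies" can be checked rather than guessed.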

4. Building on Existing Governance Structures

A recurring theme was the importance of aligning AI governance with existing governance processes, especially data governance. Many organizations have spent years creating a robust data governance framework—complete with policies for data quality, privacy, access controls, and lifecycle management. AI governance can often be an extension or refinement of these existing structures:

- Use Existing Councils or Committees: If your enterprise already has a data governance council or risk management steering committee, embed AI-specific responsibilities within them.

- Mirror Familiar Processes: Employees are more likely to adopt new procedures if they mirror old ones. For example, if your organization has standard operating procedures for data audits, you can adapt them to AI model audits.

- Limit Policy Proliferation: Rather than writing an entirely new suite of policies from scratch, see if existing data governance policies can be modified to address AI-specific issues like algorithmic bias, model drift, or transparency in decision outputs.

The session provided the example of Fidelity Investments, which implemented model governance “on top of” existing risk and data governance activities. This approach yielded more trust and better decision-making around AI precisely because it felt familiar to employees used to data governance standards.

5. Organizational Structures: Central vs. Decentralized

Several slides focused on how to structure governance teams. The “textbook” layout for AI governance usually involves:

- A Management Board or Steering Committee at the enterprise level.

- An AI Governance Council that focuses on strategic decisions—balancing risk, compliance, and investments.

- An AI Working Group that tackles day-to-day tasks such as policy writing, technical reviews, training, and so forth.

Additionally, in highly technical organizations, you might see an AI Technical Review Committee composed of expert data scientists, architects, and engineers who can vet models for accuracy, bias, or compliance before they are deployed.

Centralized vs. Hybrid (Hub-and-Spoke) Models

The speaker noted a shift toward centralized governance in many organizations, particularly as interest in generative AI grows. Centralization helps you move faster because best practices and standards can be developed by a small, specialized group and then applied organization-wide. Over time, some might pivot to a “hub-and-spoke” model: a core AI Center of Excellence sets standards, while decentralized teams (“spokes”) handle domain-specific use cases with local stewardship.

Why centralized first? Because generative AI, large language models, or advanced machine learning typically require specialized knowledge in bias detection, data privacy, regulatory compliance, and more. Centralizing that knowledge can prevent missteps, reduce duplicated effort, and ensure a uniform level of quality and consistency.

6. Diversity of Stakeholders: An Absolute Must

One of the most impactful points was the necessity of having a diverse set of stakeholders involved in AI governance. This means:

- Compliance and Legal Teams: Ensure the organization adheres to local and international regulations (e.g., the EU Artificial Intelligence Act) and that contractual obligations (especially regarding third-party data or vendor-supplied AI models) are properly enforced.

- Procurement: Help clarify responsibilities for vendor-provided models or solutions—what the vendor must disclose about the AI’s data usage or algorithmic approach, for instance.

- Audit and Risk: Provide objective, independent evaluations of how AI is affecting company risk profiles and whether internal controls (like Model Risk Management in banking) are effective.

- Security: Oversee how AI systems handle sensitive data, ensure robust identity and access management, and monitor potential adversarial attacks on ML models.

- User Representatives (Customers, Employees, and sometimes Patients or Citizens in Healthcare): Make sure AI solutions actually address user needs and don’t inadvertently harm or mislead them.

The result of weaving this stakeholder tapestry together is not just “compliance” for its own sake—it’s a system of checks and balances where multiple voices have the power to speak up if they see a problem. This is how you catch issues like hidden biases or operational risks before they metastasize into major crises.

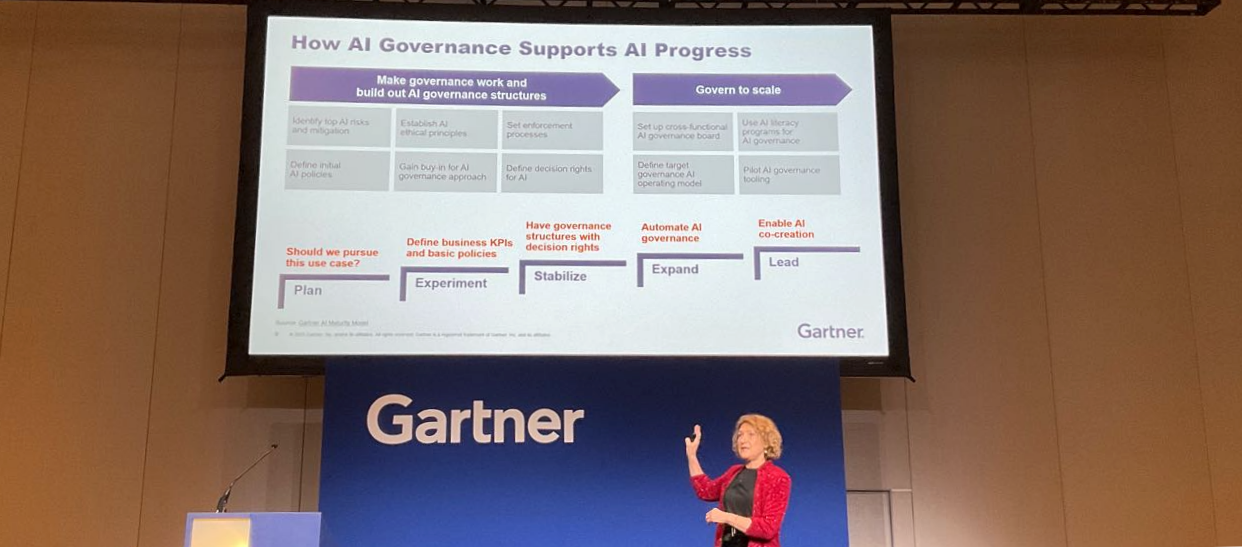

7. Scaling Governance: From Pilot to Enterprise-Wide

The session highlighted how AI governance might start “light” when the organization is in an experimental or proof-of-concept stage. Once you prove out a few AI use cases, you start looking at how to scale. Here’s where robust governance is essential—because scaling means more models, more data, more user interactions, and, inevitably, more risk.

Technical Governance and Automation

Technical governance becomes increasingly important as you scale. This includes:

- Model Management Repositories: Where you securely store all versions of your models, track changes, and capture metadata about training data, hyperparameters, accuracy metrics, and known limitations.

- Automated Monitoring: Tools that watch for model drift (when real-world data diverges from the data on which the model was trained), unusual spikes or dips in key metrics, or potential anomalies in data inputs.

- Integrated Compliance Checks: Automated scans that ensure models meet minimum thresholds for fairness, accuracy, or explainability before they are deployed—or that re-check these thresholds at regular intervals.
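As an illustration of what automated drift monitoring can look like, here is a minimal sketch that compares a training-time sample of one numeric feature with a production sample using the Population Stability Index (PSI). The session did not name a specific metric; PSI is my choice for the example, and the 0.1 and 0.25 cut-offs are a commonly quoted rule of thumb, not a standard you should adopt without validation.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the training range into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status; thresholds are illustrative assumptions."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "ok"


if __name__ == "__main__":
    training = list(range(100))
    production = [v + 50 for v in training]  # simulated shift in the inputs
    print(drift_status(population_stability_index(training, production)))
```

A check like this would typically run on a schedule per feature and per model, with "alert" results routed to whoever owns the model in your repository.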

By combining these technical tools with clear policy guidelines, organizations like Fidelity Investments or Merck have demonstrated that governance need not be a hindrance; in fact, it becomes the enabler for responsibly scaling AI across various lines of business.

8. Handling Regulatory Complexity and Rapid Change

Another major theme was the fluidity of the regulatory environment for AI. From local U.S. jurisdictions to national-level guidelines to the EU AI Act, regulations are evolving rapidly—and they often conflict. Even within a single country, you might see multiple draft bills or executive orders that address AI from different angles (privacy, bias, consumer protection, etc.).

The speaker noted that it is not uncommon for large companies to track at least 700 different local AI regulations in the U.S. alone. This can be paralyzing, but the recommended approach is:

- Understand Core Principles: Fairness, transparency, accountability, and data privacy typically form the backbone of most AI regulations. If your governance structures honor these principles, you’re more likely to comply—even if specifics differ.

- Monitor Industry-Specific Requirements: Healthcare, finance, and insurance industries often have additional or more stringent regulations. Model Risk Management is well-established in banking, for example, and can serve as a blueprint for other industries.

- Stay Flexible: Build governance that can adapt. Today’s tools, best practices, or compliance “hot topics” might be different from tomorrow’s. Keep your AI council or working group engaged in continual learning and regulatory scanning.

9. Risk Classification of AI Use Cases

A critical insight: not all AI use cases carry the same risk. By classifying use cases into low, medium, or high risk, you can apply different levels of governance rigor.

- Low-Risk Use Cases: Might include simple internal operational tools or analytics that only inform a small internal team’s decisions. If an error occurs, it’s unlikely to cause major legal, financial, or reputational damage.

- High-Risk Use Cases: Could involve consumer-facing functions (credit approvals, hiring decisions, healthcare diagnoses, etc.) where a wrong decision can severely impact individuals’ lives and open the organization to liability.

The session also mentioned “prohibited” categories in certain jurisdictions. Under the new EU AI Act, for instance, AI systems used in certain manipulative or exploitative ways are banned outright. By pre-classifying these categories within your governance framework, you streamline decision-making about which projects should not move forward at all.
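A tiering rule like this can be written down explicitly, which makes it easier to review and argue about. The sketch below is my own simplification: the attribute names, the rule that any customer-facing tool is at least medium risk, and the control lists are all assumptions rather than anything prescribed in the session or by a regulator.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class UseCase:
    name: str
    affects_individuals: bool  # e.g. credit approvals, hiring, diagnoses
    customer_facing: bool
    manipulative_or_exploitative: bool = False


def classify(use_case: UseCase) -> RiskTier:
    """Assign a tier using illustrative rules, checked from most to least severe."""
    if use_case.manipulative_or_exploitative:
        return RiskTier.PROHIBITED
    if use_case.affects_individuals:
        return RiskTier.HIGH
    if use_case.customer_facing:
        return RiskTier.MEDIUM  # assumption: my own definition of the middle tier
    return RiskTier.LOW


# Hypothetical governance rigor per tier; prohibited use cases get no path forward.
REQUIRED_CONTROLS = {
    RiskTier.LOW: ["named owner"],
    RiskTier.MEDIUM: ["named owner", "pre-launch review"],
    RiskTier.HIGH: ["named owner", "pre-launch review", "bias audit", "council sign-off"],
    RiskTier.PROHIBITED: [],
}

if __name__ == "__main__":
    tier = classify(UseCase("credit_limit_scoring", True, True))
    print(tier.value, REQUIRED_CONTROLS[tier])
```

The ordering of the checks matters: a use case that trips a more severe rule never falls through to a lighter tier.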

10. The 3x3 Matrix: Trust, Transparency, Diversity

One of the more visual takeaways was the 3x3 matrix centering on three key pillars:

- Trust

- Transparency

- Diversity

…applied to three components:

- People

- Data

- Algorithms

When you map trust, transparency, and diversity across each of these dimensions, you quickly see how multi-faceted AI governance needs to be:

- Trust in People: Do your data scientists, compliance teams, and business stakeholders trust one another? Are there strong communication channels for voicing concerns or suggestions?

- Trust in Data: Do you have confidence that the data used for model training and inference is accurate, up-to-date, and representative?

- Trust in Algorithms: Is the model itself robust, stable, and tested against real-world scenarios and edge cases?

Likewise, each dimension must also be transparent. For example, can you explain how a recommendation system arrived at a given output to the end user or to a regulator? Finally, diversity demands you involve a wide range of perspectives: domain experts, legal experts, different demographic groups, and individuals who might detect biases or blind spots.
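One way I could imagine putting the matrix to work is as a nine-cell checklist that a working group fills in per use case. The sketch below is purely illustrative; the function names and the idea of storing free-text evidence per cell are my assumptions, not part of the session material.

```python
from itertools import product

PILLARS = ("trust", "transparency", "diversity")
COMPONENTS = ("people", "data", "algorithms")


def empty_matrix():
    """Build the 3x3 grid as a dict keyed by (pillar, component)."""
    return {cell: None for cell in product(PILLARS, COMPONENTS)}


def open_cells(matrix):
    """List the cells that still have no recorded evidence."""
    return [cell for cell, evidence in matrix.items() if not evidence]


if __name__ == "__main__":
    review = empty_matrix()
    review[("trust", "data")] = "Training data refreshed quarterly and profiled"
    for pillar, component in open_cells(review):
        print(f"Still open: {pillar} in {component}")
```

A review is complete only when `open_cells` returns an empty list, which keeps any one pillar from being quietly skipped.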

11. Balancing Risks vs. Value

Throughout the session, the speaker reminded us that it’s easy to get caught up in risk mitigation, especially with so many headline-grabbing examples of AI failures. Yet AI also holds tremendous potential to increase revenue, reduce costs, and enable new services. Governance should enable that value creation by providing clarity and guardrails.

- Manage Risk with Context: If a model’s error margin is tolerable for a marketing campaign but not for clinical decision-making, governance should reflect that distinction.

- Communicate Early and Often: If you’re implementing an AI-based HR screening tool, for example, ensure potential hires understand how the system works, and provide them with the opportunity to clarify or challenge decisions.

- Set Time Frames for Iteration: AI projects can drift into endless “tinkering.” Part of governance is deciding how long you’ll iterate before you either deploy the model or shelve it. Unproductive use cases should not monopolize valuable resources if the business case no longer holds.
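The first and last points above can be combined into a simple release rule: a context-specific error tolerance plus a time box on iteration. This is a minimal sketch under my own assumptions; the contexts, the tolerance values, and the week counts are hypothetical placeholders that your governance council would set.

```python
# Hypothetical maximum tolerated error rate per deployment context.
MAX_ERROR_RATE = {
    "marketing_campaign": 0.15,
    "clinical_decision_support": 0.01,
}


def release_decision(context, observed_error_rate, weeks_spent, max_weeks):
    """Return 'deploy', 'iterate', or 'shelve' for a candidate model."""
    if observed_error_rate <= MAX_ERROR_RATE[context]:
        return "deploy"
    if weeks_spent >= max_weeks:
        # The time box is exhausted and the model still misses the bar.
        return "shelve"
    return "iterate"


if __name__ == "__main__":
    # The same model quality leads to different outcomes in different contexts.
    print(release_decision("marketing_campaign", 0.08, weeks_spent=4, max_weeks=12))
    print(release_decision("clinical_decision_support", 0.08, weeks_spent=4, max_weeks=12))
```

The useful part is less the code than the fact that the tolerance and the deadline are agreed and written down before the tinkering starts.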

12. Final Thoughts and Recommendations

Here are the practical takeaways from the speaker that resonated most with me:

- Anchor AI Governance on a Manageable Portfolio of Use Cases

  - Start small with three to six use cases that share data dependencies, so you can build good governance “muscle memory” without boiling the ocean.

  - Focus on meeting real business needs and measuring success in terms of value generated and risk mitigated.

- Align to Existing Governance Structures

  - Leverage or mirror your existing data governance or compliance frameworks rather than reinventing the wheel.

  - Ensure your new AI governance groups or committees integrate seamlessly with established risk, audit, or steering committees.

- Differentiate by Risk Level

  - Develop a clear, consistent method for classifying AI initiatives by risk (low, medium, high) or by prohibited categories.

  - Use this classification to decide how much scrutiny or compliance overhead each use case requires.

- Involve Diverse Stakeholders

  - AI governance is inherently cross-functional. Engage compliance, legal, security, procurement, risk, and domain-specific experts (like marketing, healthcare, HR) from the very start.

  - Encourage those stakeholders to ask the uncomfortable questions: “What can go wrong?” and “How will we address it?”

- Build for Scale

  - As pilot projects transition to production, adopt the necessary technical governance tools, like model repositories and automated monitoring for bias, fairness, security, and accuracy.

  - Continuously refine and evolve your governance processes to keep pace with new AI techniques (such as generative AI) and changing regulations.

- Don’t Let Governance Become a Barrier

  - While governance helps prevent fiascos like “it’s the algorithm,” it shouldn’t stifle innovation.

  - Instead, view governance as the enabling structure that clarifies roles, manages risk, and fosters trust, so your organization can confidently embrace AI for competitive advantage.

Conclusion: A Framework for Safe, Effective AI

The session ended with a reminder: AI governance might sound boring, but it’s the linchpin for harnessing AI’s power without jeopardizing trust, transparency, or accountability. If “governance” feels too heavy-handed, call it something else—like an “AI Empowerment Framework.” Whatever label you choose, the intent remains the same: to ensure that AI solutions align with organizational values, comply with regulations, protect stakeholders from harm, and ultimately deliver sustainable business value.

From the Apple Card controversy to the widespread adoption of automated model risk management in banking, real-world events make it clear that ignoring AI governance can have serious consequences. Yet far from being a corporate chore, well-designed governance can accelerate your AI initiatives, smooth the adoption of new tools and processes, and safeguard your organization’s reputation.

Key question to mull over: How will you structure your AI governance in a way that fits your company culture, meets regulatory obligations, scales gracefully, and remains agile enough to evolve alongside rapidly changing AI technologies and societal expectations?

Implementing AI responsibly is everyone’s job—but it starts with a well-defined operating model that gives each role clarity, oversight, and accountability. The next time someone dismisses governance as an unnecessary hurdle, remind them that it’s precisely what ensures you won’t be left explaining yourself in front of a judge, a regulatory agency, or a very angry co-founder (like Steve Wozniak) when “the algorithm” goes awry.