AI Cold War: US, EU, and China’s Quest for Dominance

EU Joins the War for AI Dominance

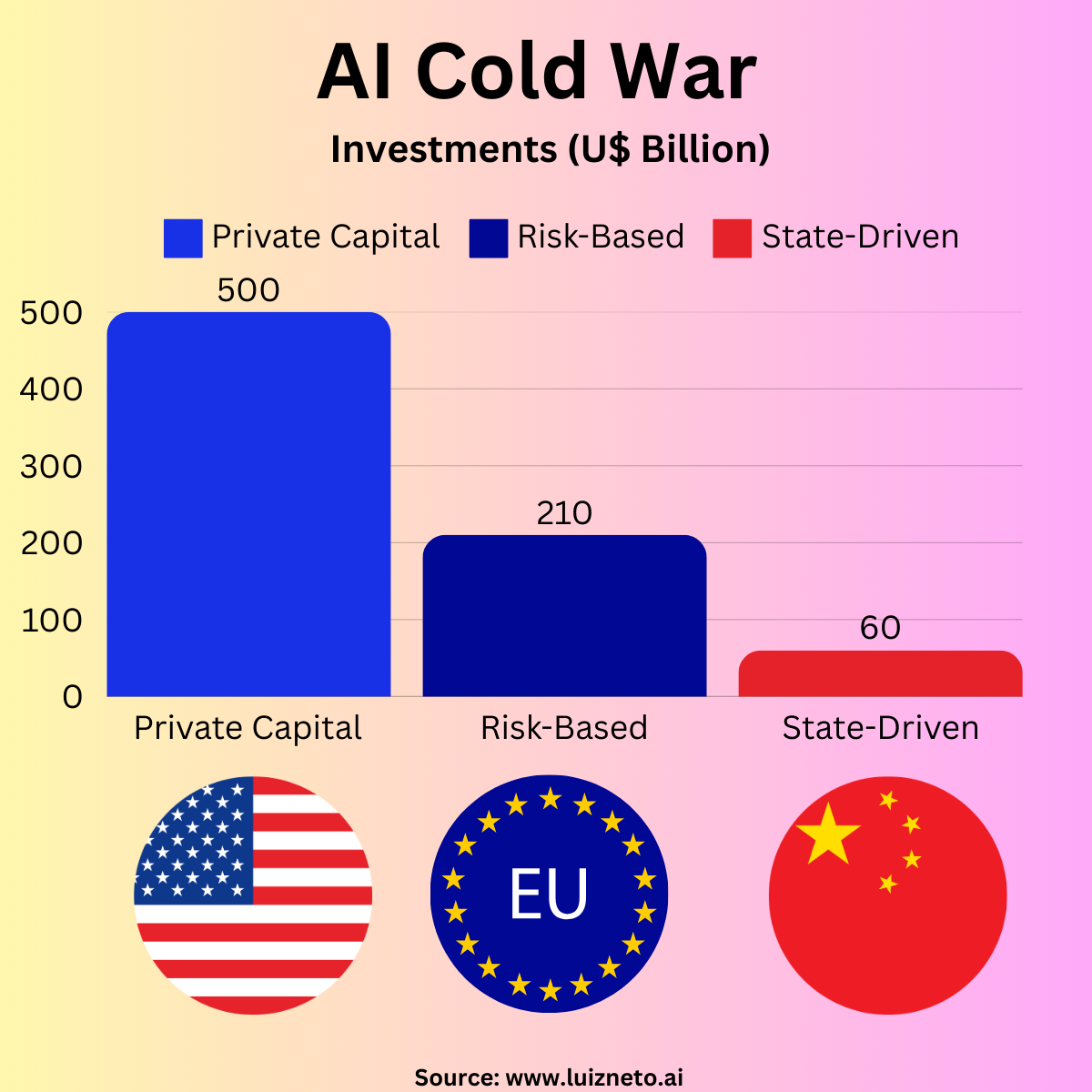

Artificial Intelligence (AI) stands at the intersection of technological progress and geopolitical strategy. The United States (US), China, and the European Union (EU) are each spending billions of dollars—sometimes in a single year—to secure leadership in this new era.

According to recent figures, the US poured a combined $74 billion into AI in 2023, with $63 billion coming from the private sector alone [1]. Meanwhile, China has invested $51 billion in data center infrastructure and $6.5 billion in private funding for AI [1].

The EU, aiming to establish a comprehensive ethical and regulatory framework, announced a colossal €200 billion plan to bolster AI capabilities [2].

If you are an enterprise leader, you want to harness AI to outpace the competition. The problem lies in scaling innovation across borders while navigating a web of diverging regulations and potential supply chain snags. Internally, you might feel constrained or uncertain about which global partnerships are safe, how to manage compliance, and where to find the right talent and data.

I understand these challenges; I’ve advised multiple Fortune 500 companies on data and AI initiatives, where regulatory complexities and geopolitical shifts can make or break a project’s success. In this blog post, I’ll distill the numbers behind this AI power struggle, explore top players’ strategies, and provide actionable steps to help you seize the opportunities hidden amid the tension.

1. Exploring Key Obstacles

1.1 Divergent Regulatory Climates

One of the most pressing challenges is the global patchwork of AI regulations. The US relies on a decentralized, largely sector-specific framework that encourages robust private investment, as reflected in the $63 billion of private AI spending in 2023 [1].

China adopts a state-driven model that aligns AI initiatives with national security and strategic goals, requiring government approval for public release of major AI models [3].

Meanwhile, the EU’s AI Act uses a risk-based approach with strict oversight of high-risk applications, and the bloc has paired it with a €200 billion investment plan [2].

For enterprise leaders, this scattered regulatory puzzle translates into operational complexity. If your business releases an AI product that functions seamlessly in the US, it may still violate the EU’s stringent “high-risk” classification guidelines.

Similarly, if you launch a system in China, you may need to pass extensive government reviews. Navigating these disparate regimes requires specialized expertise, often increasing compliance costs, delaying product rollouts, and potentially costing millions in lost opportunities.

1.2 Ballooning Infrastructure Investments

Money is flowing at record levels. The US “Stargate Project,” announced by OpenAI, aims to invest $500 billion over four years to build and expand AI infrastructure, particularly in data centers and compute resources [4].

China, for its part, is spending $51 billion on new data center infrastructure, plus an additional $6.5 billion in private sector AI funding [1].

On the European front, significant sums are earmarked for supercomputing under the Digital Europe Programme, alongside the €95.5 billion Horizon Europe research initiative, a substantial portion of which is devoted to AI [5].

These enormous sums not only reflect the scale of AI’s potential but also underscore the rising bar for market entrants. Smaller organizations sometimes struggle to keep pace with the computational and data-storage demands of advanced AI systems. This relentless capital injection from the “big three” regions—US, China, and the EU—can also skew global competition, as smaller nations or companies often lack the resources to innovate at scale, ultimately reinforcing the dominance of technology giants.

1.3 The Impending AI Job Upheaval

From a workforce perspective, the drumbeat of automation and AI-driven transformation is getting louder. Some estimates suggest that AI and automation could displace up to 800 million jobs worldwide by 2030 [6]. Another projection suggests that 40–50% of jobs could be exposed to AI, with lasting displacement likely unless governments and industries develop robust reskilling and transition programs [7]. Such displacement isn’t limited to low-wage positions; it can also disrupt white-collar roles as generative AI models become adept at coding, research, and data analysis.

For enterprise leaders, this signals a dual challenge:

- Talent Attrition and Reskilling: While some roles vanish or transform, organizations must invest in upskilling employees, especially in data science, AI ethics, and machine learning engineering.

- Ethical Perception: Failing to address large-scale job losses or displacement can tarnish your brand. Conversely, a well-executed strategy that prioritizes retraining can bolster an organization’s reputation.

1.4 Cybersecurity Threats Escalate with AI

Generative AI is reshaping the threat landscape, making cyberattacks more sophisticated and scalable. The World Economic Forum’s Global Cybersecurity Outlook 2025 indicates that 66% of executives see AI as having a “substantial influence” on cybersecurity [8]. Yet only 37% of organizations have fully integrated AI security assessments into their deployment processes [9].

Such gaps in digital defense, especially for companies operating across multiple jurisdictions, can be catastrophic. State-sponsored espionage, IP theft, or AI model manipulation remain acute concerns in the context of an AI Cold War—where adversaries might exploit vulnerabilities to compromise supply chains and crucial data. The stakes only climb higher when advanced AI methods, such as deepfakes or AI-driven reconnaissance, can bypass traditional security protocols.

2. Leveraging the Global AI Race for Advantage

2.1 Risk-Based Governance as a Blueprint

The EU AI Act’s risk-based approach is increasingly cited as a template beyond Europe. The Act classifies AI systems into tiers, ranging from minimal to unacceptable risk, and enforces stringent requirements for the higher tiers [2]. This model resonates with many corporations looking to unify their compliance strategies. A key insight is to adopt an internal AI risk framework before one is mandated.

- Practical Tip: Conduct a thorough threat modeling exercise or “AI safety check” for each project, evaluating data sources, model complexity, and end-user impact. Proactive alignment with frameworks like the EU AI Act can smooth expansions into European markets and maintain brand trust.
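The “AI safety check” above can start as a simple triage step. The sketch below is a minimal illustration, assuming hypothetical category lists (`PROHIBITED_USES`, `HIGH_RISK_DOMAINS`) and a hypothetical `AIProject` record. It loosely mirrors the EU AI Act’s minimal-to-unacceptable tiers but is not a legal classification, which requires review of the Act itself.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """Simplified internal tiers, loosely modeled on the EU AI Act's four levels."""
    MINIMAL = 0
    LIMITED = 1       # transparency duties, e.g. disclosing that users talk to an AI
    HIGH = 2          # documentation, human oversight, conformity checks
    UNACCEPTABLE = 3  # do not build or deploy


# Hypothetical example categories; real classification needs legal review
# of the Act's text and annexes, not set lookups.
PROHIBITED_USES = {"social_scoring", "subliminal_manipulation"}
HIGH_RISK_DOMAINS = {"healthcare", "employment", "credit", "critical_infrastructure"}


@dataclass
class AIProject:
    name: str
    use_case: str                # what the system does
    domain: str                  # where it is deployed
    interacts_with_people: bool  # chatbots, generated media shown to end users


def safety_check(project: AIProject) -> RiskTier:
    """Return a first-pass risk tier; the most severe matching rule wins."""
    if project.use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if project.domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if project.interacts_with_people:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


portfolio = [
    AIProject("symptom-checker", "triage_support", "healthcare", True),
    AIProject("support-bot", "customer_chat", "retail", True),
    AIProject("invoice-ocr", "document_extraction", "finance_ops", False),
]
for p in portfolio:
    print(f"{p.name}: {safety_check(p).name}")
```

Checking the most severe rule first means each project lands in the highest tier it qualifies for; anything flagged HIGH or UNACCEPTABLE would then go to a full human and legal review.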

2.2 Tackling Infrastructure Challenges Through Collaboration

Overcoming the infrastructure gap often requires forging alliances. For instance, the UAE’s collaboration with France on a €30–50 billion investment in AI data centers includes advanced chip development and “virtual data embassies” to protect AI infrastructure [10]. This project not only positions France as a critical AI hub within Europe but also leverages the UAE’s quest to diversify its economy away from oil [10,11].

- Practical Tip: Explore cost-sharing agreements or collaborative R&D initiatives that let you tap into high-performance computing (HPC) resources without shouldering the entire expense. Consider multi-regional partnerships to reduce your exposure to potential trade restrictions.

2.3 Balancing Aggressive R&D with Ethical Mandates

Innovation thrives in a flexible environment, as the US’s decentralized, sector-specific approach illustrates. This method fosters rapid growth and helps explain the nation’s $63 billion in private AI investment [1]. However, Europe’s emphasis on ethics and accountability can build public trust, attract certain investor groups, and create stable markets over the long term [2].

- Practical Tip: Determine the sweet spot between speed-to-market and compliance. If you’re in a high-risk domain like healthcare or autonomous vehicles, a more thorough ethics review could save you from crippling penalties or reputational harm later.

2.4 Minding the Talent Gap with Targeted Programs

China has integrated AI education into its national curriculum, ensuring a robust pipeline of AI-literate graduates [12]. The EU’s Digital Education Action Plan likewise invests in AI and digital skill-building to cultivate a broader workforce capable of implementing advanced solutions [13]. Meanwhile, US tech giants continue to lure top researchers with lucrative packages, leaving smaller players and other nations scrambling for talent.

- Practical Tip: Rather than passively suffering “brain drain,” design scholarship programs, sponsor AI hackathons, or provide career retooling options. Collaborate with universities to funnel well-trained graduates directly into your workforce. Alternatively, offer flexible policies (like remote work or job rotations) to appeal to a global talent pool wary of relocating.

2.5 Harmonizing Cyber Defenses Across Borders

International cooperation is crucial to tackling AI-driven cyber threats, especially as nation-states ramp up their capabilities. The International Network of AI Safety Institutes fosters cross-border dialogues on AI vulnerabilities [14]. Engaging in such alliances keeps you informed about the latest threat vectors and advanced defense mechanisms.

- Practical Tip: Leverage advanced encryption, zero-trust architectures, and continuous AI model monitoring to spot anomalies in real time. Participate in global threat intelligence exchanges to cultivate a “collective defense” posture against increasingly sophisticated attacks.
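Continuous model monitoring can begin with something as simple as a rolling statistical baseline. Here is a minimal sketch, assuming you already collect one numeric health metric per interval (for example, a model’s mean prediction confidence). The class name, window size, and 3-sigma threshold are illustrative choices, not a standard.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values that fall far from a rolling baseline using a z-score test."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.history = deque(maxlen=window)  # keeps only the most recent values
        self.threshold = threshold           # standard deviations that count as anomalous
        self.min_history = min_history       # don't judge until the baseline is meaningful

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma == 0:
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > self.threshold
        # Only normal points update the baseline, so outliers
        # cannot pull the definition of "normal" toward themselves.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


# Example: hourly mean prediction confidence (illustrative numbers).
detector = RollingAnomalyDetector(window=20)
readings = [0.91, 0.92, 0.90, 0.93, 0.91, 0.92, 0.90, 0.91, 0.92, 0.93, 0.55]
flags = [detector.observe(r) for r in readings]
print(flags[-1])  # True: 0.55 is far outside the 0.90-0.93 baseline
```

Because flagged readings never enter the window, a burst of suspicious values cannot shift the baseline. The trade-off is that a legitimate, permanent change will keep raising alerts until someone reviews it and resets the detector.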

3. Approaches by Global Tech Titans

3.1 $500 Billion Stargate Project in the US

OpenAI, with backing from SoftBank, aims to invest $500 billion over four years to further US AI infrastructure under the “Stargate Project” [4]. This massive infusion covers advanced data centers, frontier research, and the training of next-generation AI scientists. The US model—less centralized and more venture-capital driven—enables startups and research labs to flourish rapidly, though regulatory fragmentation remains a challenge.

3.2 China’s Government-Driven Blueprint

China’s Next Generation Artificial Intelligence Development Plan aspires to global AI leadership by 2030 [15]. The government invests heavily in R&D and fosters synergy between state agencies and national tech champions (like Baidu, Alibaba, and Tencent). With $51 billion earmarked for data center infrastructure, the country underscores its determination to solve the computing bottleneck [1]. This strategy yields swift implementation, yet concerns linger about creative freedoms under stringent oversight.

3.3 The EU’s Aspirational “Global Standard Setter” Role

The EU’s AI Act is not just an internal regulatory tool; it seeks to create a “Brussels Effect,” shaping AI governance worldwide [16]. Backed by €200 billion in AI funding commitments, plus the €95.5 billion Horizon Europe program, the region stands out for championing ethics, safety, and accountability [2,5]. While some European firms worry about rising compliance costs (16% of EU AI startups are considering relocating), others see an ethical brand advantage in global markets [17].

4. Real-World Examples of AI Adoption Amid Rivalries

4.1 The UAE-France Mega AI Campus

Problem: The French government sought a massive AI infrastructure project to help the EU keep pace with the US and China but lacked sufficient capital. Simultaneously, the UAE wanted to diversify its economy beyond oil by investing in emerging technologies.

Solution: In 2025, the UAE pledged €30–50 billion to establish Europe’s largest AI-focused data center in France [10]. The plan covers advanced chip design, specialized computing facilities, and the creation of “virtual data embassies” for secure data operations.

Results:

- France solidified its position as a major AI hub in Europe, anticipating hundreds of new high-skilled jobs and robust AI research collaborations [11].

- The UAE gained global AI influence, aligning with its long-term economic diversification strategy.

- The project aims to run primarily on renewable and nuclear energy, reinforcing sustainability commitments [18].

4.2 India’s Embrace of a Unique Regulatory Model

Although India is not often grouped with the US, EU, or China in AI leadership discussions, it provides a revealing case of “blended” governance. India was among some 60 countries, including China and Brazil, that signed the “Inclusive and Sustainable Artificial Intelligence for People and the Planet” statement at the Paris AI Action Summit 2025 [19]. While India invests in AI through state-backed research bodies, it also allows private innovation to thrive.

Problem: India lacked a coherent AI governance framework to manage the technology’s impact on a population of over 1.4 billion people.

Solution: The country initiated targeted policies that draw on best practices from the EU’s risk-based system and the US’s market-driven approach, focusing on digital literacy, small business adoption, and scaling AI for national social initiatives.

Results:

- Millions more Indians gained access to AI educational resources, spurring local entrepreneurship in AI-based agriculture, healthcare, and digital services.

- While not as financially muscular as the US or China, India’s inclusive approach fosters grass-roots innovation that addresses local priorities, from poverty alleviation to urban planning.

5. Actionable Roadmap for Enterprise Leaders

5.1 Align AI Projects with International Risk Classifications

- Why: Regulators in the US, EU, and China categorize AI risks differently. Mapping each project to these classifications early cuts compliance costs and accelerates global rollouts.

- How: Develop an “Internal AI Audit Program” that ranks project risk levels (e.g., data sensitivity, potential societal harm). Adopt thorough documentation and regular model stress tests, reflecting the EU’s risk-based guidelines [2].
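An Internal AI Audit Program needs some way to decide which projects get stress-tested and documented first. A minimal sketch, assuming a hypothetical 1-to-5 rating on three factors and illustrative weights that each organization would calibrate for itself:

```python
from dataclasses import dataclass

# Hypothetical weights; each organization would calibrate its own.
WEIGHTS = {
    "data_sensitivity": 0.4,  # e.g. public data vs. health or biometric data
    "societal_harm": 0.4,     # severity and reversibility of potential harm
    "autonomy": 0.2,          # how much the system acts without human review
}


@dataclass
class ProjectAssessment:
    name: str
    data_sensitivity: int  # rating from 1 (low) to 5 (high)
    societal_harm: int     # rating from 1 (low) to 5 (high)
    autonomy: int          # rating from 1 (human decides) to 5 (fully automated)

    def risk_score(self) -> float:
        """Weighted sum of the 1-5 ratings; weights sum to 1, so the score stays in 1-5."""
        return sum(getattr(self, factor) * weight for factor, weight in WEIGHTS.items())


def audit_queue(projects: list[ProjectAssessment]) -> list[tuple[str, float]]:
    """Rank projects so the riskiest get stress tests and documentation first."""
    ranked = sorted(projects, key=lambda p: p.risk_score(), reverse=True)
    return [(p.name, round(p.risk_score(), 2)) for p in ranked]


projects = [
    ProjectAssessment("marketing-copy-generator", 1, 2, 3),
    ProjectAssessment("loan-approval-model", 5, 5, 4),
    ProjectAssessment("internal-search", 3, 1, 2),
]
print(audit_queue(projects))
# [('loan-approval-model', 4.8), ('internal-search', 2.0), ('marketing-copy-generator', 1.8)]
```

A weighted score is deliberately simple: it orders the audit queue, but it should sit alongside, not replace, the tier-based checks that regulators actually apply.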

5.2 Set Up a Global Compliance Nerve Center

- Why: Fragmentation in AI policy means companies face diverse, and sometimes conflicting, regulations.

- How: Form a cross-functional “compliance nerve center” with legal, technical, and policy experts. Track changing rules, like China’s new approval processes or US state-specific AI legislation, so you can adapt in near-real time [3].

5.3 Future-Proof Your Workforce

- Why: AI could displace up to 800 million jobs by 2030, fueling both organizational and social disruptions [6].

- How: Launch internal reskilling academies focusing on data engineering, machine learning operations, and AI ethics. Build ties with educational institutions to co-develop specialized curricula. Provide career transition support for roles impacted by automation.

5.4 Invest in Cyber-Resilience

- Why: Sophisticated AI-driven attacks can lead to massive financial or reputational damage, especially for global businesses.

- How: Allocate a dedicated budget to AI-specific security frameworks, including real-time anomaly detection and robust encryption. Engage with international AI safety networks like the AISI Network to stay abreast of emerging threat intelligence [14].

5.5 Diversify Infrastructure Partnerships

- Why: With $500 billion earmarked for the US Stargate Project, $51 billion from China for data centers, and the EU pushing for more HPC capacity, relying on a single region’s infrastructure can be risky [1,4].

- How: Evaluate multiple cloud providers across the US and EU and, where relevant, capacity offered through partnerships like the UAE-France data center. This not only reduces lock-in but also mitigates operational interruptions if political tensions escalate.

5.6 Opt for Transparent and Ethical AI

- Why: Consumers and policymakers increasingly favor responsible AI solutions. Compliance does carry real costs (16% of EU AI startups are contemplating relocation due to compliance burdens), but companies that adhere often gain an ethical market advantage [17].

- How: Implement explainable AI (XAI) techniques. Document model decisions, data lineage, and potential biases. Introduce robust stakeholder feedback loops to uphold accountability.
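Documenting model decisions and data lineage is easier when every decision produces a structured record. The sketch below assumes a hypothetical `DecisionRecord` schema and a JSON-lines audit log; the model name, data source, and attribution values in `explanation` are illustrative and would in practice come from your own pipeline and XAI method.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable entry: what the model decided, on what data, and why."""
    model_version: str
    training_data_sources: list[str]  # lineage: where the training data came from
    input_fingerprint: str            # hash of the input, not the raw (possibly personal) data
    decision: str
    explanation: dict[str, float]     # feature attributions from an XAI method
    known_limitations: list[str] = field(default_factory=list)
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def fingerprint(payload: dict) -> str:
    """Stable SHA-256 of the input; key order does not affect the hash."""
    canonical = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def log_decision(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example values for illustration only.
applicant = {"income": 52000, "region": "EU-West"}
record = DecisionRecord(
    model_version="credit-risk-v3.2",
    training_data_sources=["loans_2019_2023.parquet"],
    input_fingerprint=fingerprint(applicant),
    decision="refer_to_human",
    explanation={"income": -0.12, "region": 0.03},
    known_limitations=["Under-represents applicants under 21"],
)
print(log_decision(record))
```

Hashing the input rather than storing it lets auditors match a record to a specific case without the log itself becoming a store of personal data.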

Culmination of a Power Play

We are living in a moment where AI shapes not only technology but global power balances. The US invests tens of billions through private channels, with large-scale, high-risk bets like the $500 billion Stargate Project fueling fast-paced innovation [4]. China’s $51 billion push into AI data centers underscores its ambitions under the Next Generation Artificial Intelligence Development Plan [1,15]. Europe, meanwhile, sets a new regulatory and ethical bar, coupling a massive €200 billion AI investment with robust oversight laws [2].

For enterprise leaders, these collisions of policy, talent, and infrastructure may feel daunting—but they also open unprecedented opportunities. Whether you’re building the next AI-enabled healthcare solution, re-engineering supply chains, or simply automating routine administrative tasks, your strategic alignment with global developments can help you maintain a competitive edge. As these three blocs strive for AI hegemony, your organization’s path to success depends on balancing risk, forging cross-border alliances, and championing transparent, ethical AI innovation.


References

Below are the sources referenced throughout this post:

[1] https://gfmarafon.medium.com/global-ai-investment-landscape-59436cd39d0a

[2] https://awapoint.com/balancing-innovation-and-regulation-comparing-chinas-ai-regulations-with-the-eu-ai-act/

[3] https://nquiringminds.com/ai-legal-news/global-ai-legislation-a-comparative-overview-of-eu-us-and-china-frameworks/

[4] https://techstartups.com/2025/01/21/openai-launches-stargate-project-a-500b-company-backed-by-softbank-to-build-ai-infrastructure-in-the-u-s/

[5] https://cross-border-magazine.com/ai-investment-in-the-eu-vs-usa/

[6] https://summaverse.com/blog/addressing-job-displacement-due-to-ai-solution-and-strategy

[7] https://digialps.com/imf-reveals-ai-adoption-to-surge-permanently-displacing-workers/

[8] https://www.cnbctv18.com/technology/wef-global-cybersecurity-outlook-2025-report-urgent-action-generative-ai-cyber-threats-19539316.htm

[9] https://aicyberinsights.com/global-cybersecurity-outlook-2025-by-the-world-economic-forum/

[10] https://www.theregister.com/2025/02/08/uae_france_dc_ai/

[11] https://www.rasmal.com/uae-to-invest-up-to-e50-billion-in-ai-data-centers-in-france/

[12] http://www.moe.gov.cn/jyb_xwfb/s271/201804/t20180403_331083.html

[13] https://ec.europa.eu/education/education-in-the-eu/digital-education-action-plan_en

[14] https://thefuturesociety.org/aisi-report/

[15] http://www.gov.cn/zhengce/content/2017-07/20/content_5211996.htm

[16] https://www.brookings.edu/articles/the-eu-ai-act-will-have-global-impact-but-a-limited-brussels-effect/

[17] https://www.forbes.com/councils/forbestechcouncil/2025/01/23/the-eu-ai-act-a-double-edged-sword-for-europes-ai-innovation-future/

[18] https://opentools.ai/news/uae-makes-mega-ai-bet-with-euro50-billion-investment-in-french-data-center

[19] https://compass.rauias.com/current-affairs/inclusive-ai/